Summary: Game testing researches the notion of fun. Compared with mainstream UX studies, it involves many more users and relies more on biometrics and custom software. The most striking findings from the Games User Research Summit were the drastic age and gender differences in motivation research.

Last week, I attended the Games User Research Summit (GamesUR or GUR), which happened in connection with the Game Developers Conference (GDC), but was hosted separately at Electronic Arts (EA) in Silicon Valley. (As you can tell, these guys love their acronyms.)

With EA being the gracious hosts, the conference happened under the watchful eyes of an enormous dragon and the break room was festooned with large posters of the classic Star Wars characters. It was clear just from the surroundings that we were not in Kansas anymore. (Or, rather, not in the realm of mainstream UX. Here really be dragons.)

It was clear from the terminology bandied about in the talks that games are different from other design projects. Take, for example, the game Rainbow Six Siege (inevitably abbreviated as R6S, because they do like acronyms). One of the UX metrics tracked during the testing of this game was the kill/death ratio, which, admittedly, is not one of the things we teach in our otherwise comprehensive Measuring User Experience seminar. (This ratio is the number of opponents you kill divided by the number of your team members who die during a death match, another term we don’t use much in mainstream design projects.)
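
The ratio as defined above can be sketched in a few lines of Python. This is only an illustration of the arithmetic: the function name and the sample numbers are assumptions, not anything shown at the summit, and real game telemetry may define the metric differently (for example, per player rather than per team).

```python
from typing import Optional


def kill_death_ratio(kills: int, team_deaths: int) -> Optional[float]:
    """Opponents killed divided by teammates lost, per the definition above.

    Returns None when no teammate died, because the ratio is undefined then.
    (Hypothetical helper, not taken from any game's telemetry code.)
    """
    if kills < 0 or team_deaths < 0:
        raise ValueError("counts must be non-negative")
    if team_deaths == 0:
        return None  # undefined; the caller decides how to report it
    return kills / team_deaths


# Made-up match: 12 opponents killed, 8 teammates lost.
print(kill_death_ratio(12, 8))  # 1.5
```

A ratio above 1 means the team killed more opponents than it lost members; what counts as a "fun" value is a design question, not something the formula answers.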

Much Remains the Same

Despite the dragon and the death matches, I actually saw many similarities between the games user-experience (GUX) world and the mainstream UX world.

In a brilliant opening talk, Brandon Hsuing (Director of Insights at Riot Games) explained how he has organized his department of 70 people. A main takeaway was the benefit of embedding UX researchers within product teams, not only at the feature-design level but ideally also at the higher level of the complete game. Twenty years ago, at Sun Microsystems, we made the same key point of using a matrix organization where researchers report to a central, specialized group but sit with a product team in a dotted-line relationship.

Since Riot Games has close to 2,000 employees, a department of 70 insight professionals might seem too small, given the recommendation that 10% of project teams be allocated to user research. However, because Riot is both a studio (designing and implementing games) and a publisher (distributing and selling games), much of the total staff must be allocated to the publishing side. So an insights team of 70 may actually be close to the recommended 10% of the people actually building the new products.
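
The arithmetic behind this estimate can be made explicit. The sketch below uses only the round figures quoted above (70 researchers, roughly 2,000 employees, a 10% target); the function names are hypothetical, and how many of Riot's employees actually work on building games is not stated here.

```python
def research_share(researchers: int, staff: int) -> float:
    """Fraction of `staff` who are researchers."""
    if staff <= 0:
        raise ValueError("staff must be positive")
    return researchers / staff


def staff_for_target_share(researchers: int, target_share: float) -> float:
    """Headcount at which `researchers` would make up exactly `target_share`."""
    if not 0 < target_share <= 1:
        raise ValueError("target_share must be in (0, 1]")
    return researchers / target_share


INSIGHTS_TEAM = 70    # from the talk
TOTAL_STAFF = 2_000   # "close to 2,000", so approximate
TARGET_SHARE = 0.10   # recommended share of project staff

# Share of the whole company: well below the target.
print(f"{research_share(INSIGHTS_TEAM, TOTAL_STAFF):.1%}")  # 3.5%

# Development headcount at which 70 researchers would equal 10%.
print(round(staff_for_target_share(INSIGHTS_TEAM, TARGET_SHARE)))  # 700
```

With these round numbers, the team would reach the 10% mark if about 700 people were building games, which is the sense in which the publishing side changes the picture.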

The main innovation I got from Hsuing’s talk lies in the very name of his department: the Insights Team. The wording may seem like superficial propaganda, but in reality it makes a profound point: the goal of research is to increase company profitability by improving products and raising conversion rates. We can achieve these profitability goals only if the UX teams deliver actionable insights and drive the company’s development activities at both the tactical level (better design) and the strategic level (discovering customer needs and building products to meet and exceed these needs). Most companies’ UX maturity is not even at the tactical level yet, but to reach the strategic level, we do have to don that “insight team” hat.

I was pleased to hear that Riot’s Insights Team encompasses the company’s user research, as well as their market research and analytics. Analytics and UX should be joined at the hip, but are too often separated in different departments. And market research is usually kept even further from UX. This despite the many benefits of integrated customer insights that triangulate findings from multiple methods.

Another presentation that elicited some déjà-vu moments came from Laura Hammond from UEgroup, who talked about testing gesture-based games. She recommended avoiding swivel chairs when testing young children, because kids get too easily distracted by moving around on the chair. True, but an observation we made in 2001 in the first edition of our report from usability testing of children using websites. The kids who were 6 years old in 2001 are now 21 and thus qualified to participate in our current user research with young adults/Millennials. It’s nice to know that the next generation of children is the same, at least when it comes to swivel chairs in the usability lab.

To record the test sessions and get the gestures on video, the researchers recommended using 3 video cameras: from above, from the side, and facing the user. Exactly what we did 20 years ago in the hardware human-factors lab to record system administrators installing hard drives in servers. Testing 3D user interfaces requires more equipment than studying 2D websites.

Of course, Hammond’s talk also had new observations, specific to games for the Intel RealSense camera (which requires users to control the game by moving their hands in front of the camera). For example, the researchers needed to include the users’ hand size as one of the screening criteria when recruiting test participants. We certainly don’t ask about hand size in our screeners, and apparently, it’s a challenge to get it right.

Another insight from Hammond’s talk was that testing 3D gestures introduces yet another opportunity for the study facilitator to bias the user: the very way you sit or move may prime the user to copy aspects of your body language in their gestures.

Multiuser Testing

Unlike mainstream user testing, game research often involves testing with many users in parallel — either because the game takes a long time to play or because it involves multiple players.

(On occasion we do test with multiple users at the same time even in traditional UX projects — for example when running usability studies with young children, but mostly we run one user at a time, because we want to pay close attention to every detail of the user’s behavior. Also, a website visit usually only lasts 2–3 minutes, with a typical page view lasting maybe 30 seconds, so we should aim to study everything in detail.)

Hardcore gamers will often play for hours at a stretch, with much of their time spent repeatedly shooting at something. As a result, playtesting labs around the world seem to be uniformly designed to accommodate 12–20 game testers (or more, for big companies) who play the same game, each at their own console.

Sebastian Long from Player Research in the UK described his company’s playtesting lab: The observation room included a big projection display with reduced versions of 12 users’ screens, as well as a pushbutton switch for observers to select one of the 12 screens to be magnified on a separate monitor for high-resolution observation when one of the testers did something interesting in the game. This need to alternate between surveying many people’s broad behavior and paying detailed attention to a single person’s specific interactions is rare outside games research.

The multiplayer component of many modern games is the second reason for multiuser sessions in game research. Whether several players play together in the same room or across the network in real time, researchers must understand their processes of communication and collaboration. In contrast, in mainstream UX, even when taking into account social media and omnichannel experiences, people rarely work together at the same time with the same interface to solve the same task.

Games researchers often have access to data at true scale: in the case of the R6S kill/death ratio I mentioned above, Olivier Guedon from Ubisoft measured the ratio across 440,000 games during alpha testing and 182M games in two beta-testing rounds. In the alpha, the defending team won 61% of the time, resulting in tweaks that made it easier to attack. As a result, the attackers won 58% of the time in the first beta test. Further redesigns finally made the game balanced in the second beta. This is a great example of iterative design and of the common observation that fixing one UX problem (too easy to defend) sometimes introduces a new problem (too easy to attack), which is why I recommend as many rounds of iteration as possible.
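
A balance check of this kind can be sketched as follows. The two win rates are the ones reported above; everything else is an assumption made for illustration: the function names, the 5-point tolerance for calling a game "balanced," and the simplification that every game is won by one side or the other (no draws). The second beta is left out because no figure for it is given here.

```python
def defender_win_rate(defender_wins: int, games: int) -> float:
    """Share of games won by the defending team."""
    if games <= 0:
        raise ValueError("games must be positive")
    return defender_wins / games


def balance_verdict(rate: float, tolerance: float = 0.05) -> str:
    """Classify a defender win rate; `tolerance` is an assumed threshold."""
    if rate > 0.5 + tolerance:
        return "favors defenders"
    if rate < 0.5 - tolerance:
        return "favors attackers"
    return "balanced"


# Reported rates: defenders won 61% in the alpha; attackers won 58% in the
# first beta, which (assuming no draws) leaves defenders with 42%.
rounds = {"alpha": 0.61, "beta 1": 1 - 0.58}

for name, rate in rounds.items():
    print(name, f"{rate:.0%}", balance_verdict(rate))
# alpha 61% favors defenders
# beta 1 42% favors attackers
```

Running the same check after every design change is what turns a single measurement into an iterative process: each round either lands inside the tolerance band or points to the direction of the next tweak.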

Professional Users

In the gaming domain, some companies have to accommodate two classes of users: normal users (who buy the game and play for fun) and professional users who are paid to play the game as an “eSport.” eSports are a big business with huge audiences watching the championship games. (In 2014, Amazon.com paid almost a billion dollars for one eSports site.)

Of course, one of the oldest lessons in traditional user experience is that we need to design for both novice and expert users. Each group has a different skill level and needs different features. (More on this in our UX Basic Training course; it’s that fundamental a concept.) But professional users take this distinction to an entirely different level and require separate research into what happens when operating a user interface becomes its own goal and the focus of somebody’s career.

Designing Fun

User satisfaction has always been one of the 5 main usability criteria: people will most definitely leave a website that’s too unpleasant. Even in enterprise software, you want users to like your design to reduce employee turnover. That said, mainstream UX research spends much time on other criteria, such as learnability and efficiency, because users are so goal oriented: they go to a website to get something done (say, buy something or read the news), not to have fun with the user interface.

In strong contrast, a game has no purpose other than fun. The stated goal may be to kill the boss. (No, not your manager, but a nasty gremlin or alien invader — these game enemies are referred to as bosses.) But the real goal is to have fun while doing so. That’s why it’s important to study the kill/death ratio: if designers made the game interface too good at killing bosses, that would be efficient, but not fun. (Good traditional UX; bad GUX.) Gamers need just the right level of challenge, because it’s also no fun if you die immediately and don’t get to off some bosses.

In an attempt to pinpoint exactly when users are excited or bored, some GUR researchers employ esoteric biometric sensors. For example, they measure skin-conductance levels (sweat activity), which are related to physiological arousal. Pierre Chalfoun gave a good overview of biometrics at Ubisoft, and he emphasized that these physiological sensors are not always directly connected to user emotions, which is what we really want to design for. (The goal is engaged users, not sweaty users, even if there is a correlation.)

Chalfoun presented an interesting study of game tutorials, which showed that users’ levels of frustration, as indirectly measured by biometrics, mounted every time they failed to understand a game tutorial. First failure: somewhat frustrated. Third failure in a row: very frustrated. While this finding makes intuitive sense and may not be worth the cost of a biometrics lab, Chalfoun stressed that good visualizations of such data convince management and developers to take research seriously and invest in fixing the bad designs that caused such growing user frustration. (Without quantifiable data, it’s easier to dismiss user frustration as a minor matter that can’t hold up the release schedule.)

More Tech

Across the conference presentations, it was striking how many GUX teams make use of custom-written software. Everything from running the playtest lab to game telemetry (“calling home” with data about live play in a beta test) requires the company to allocate software developers to build special features just for the researchers.

I think there are two reasons that GUX teams seem to be more tech heavy than mainstream UX teams:

- The game researchers are embedded in highly geeky companies with legions of programmers, and it’s their company culture that if you need something, you go build it.

- The many game genres diverge widely in their needs, and thus require custom software to study seriously. In contrast, all websites are built on top of the browser and require the same types of interactions. This means that it’s actually possible for third-party solutions to offer, say, cloud-based analytics tools that collect most data needed to study a website, thus eliminating the need for custom software.

Age and Gender Differences

The best (but very data-dense) presentation at GamesUR was by Nick Yee from Quantic Foundry. Yee has collected data from 220,000 gamers who completed a survey about what motivates them to play computer games. Motivations clustered into 6 groups: action, social, mastery, achievement, immersion, and creativity. Obviously, different games speak to different motivations: a death-match game will attract gamers motivated by action and social play, whereas a simulation game would be preferred by people interested in immersion and creativity.

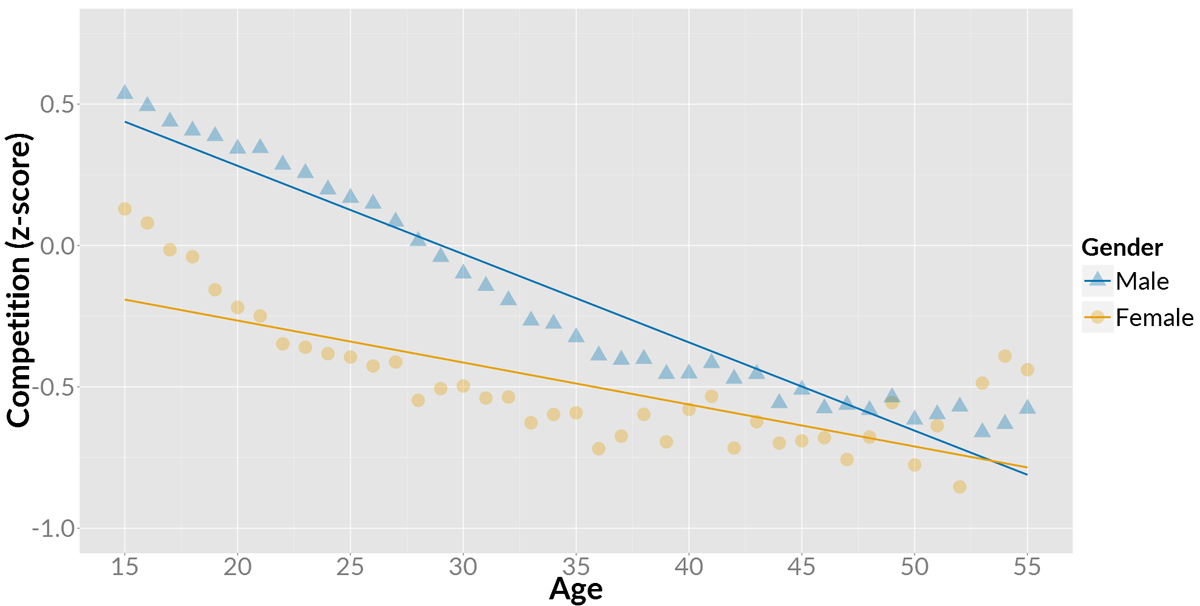

One of the main components of the social cluster is competition. In this cluster gamers care about beating other players and being acknowledged as a high-ranking player (even if they don’t take it to the eSports extreme). The following chart shows the average score on the competition metric for men and women at different ages:

Average gamer scores, expressed as standard deviations from the overall mean across all ages and genders. High scores indicate people who are more motivated by competition. Source: Quantic Foundry, reprinted by permission.

Two observations from the chart:

- Men are more competitive than women. (Or, more precisely, men like competitive games more than women do.) Maybe not a big surprise.

- Competitiveness decreases drastically with age. In fact, the difference between young and old gamers is more than twice the difference between men and women, and by age 50 there’s no real difference between men and women anymore. (Older women might even be more competitive than older men, but there’s too little data in this research to say for sure.)
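
For readers unfamiliar with scores "expressed as standard deviations from the overall mean," the sketch below shows one common way to compute them: standardize every respondent's score against the whole sample, then average within each group. The data and group labels are made up purely so the arithmetic is easy to follow, and the use of the population standard deviation is an assumption; Quantic Foundry's actual procedure may differ.

```python
from collections import defaultdict
from statistics import mean, pstdev


def standardized_group_means(records):
    """Return {group: mean z-score} for (group, score) pairs.

    Each score is standardized against the mean and (population) standard
    deviation of ALL records, so 0.0 means "same as the overall average."
    """
    scores = [score for _, score in records]
    overall_mean = mean(scores)
    overall_sd = pstdev(scores)
    if overall_sd == 0:
        raise ValueError("scores have no variation; z-scores are undefined")

    z_by_group = defaultdict(list)
    for group, score in records:
        z_by_group[group].append((score - overall_mean) / overall_sd)
    return {group: mean(zs) for group, zs in z_by_group.items()}


# Made-up survey scores for two hypothetical groups (not real data).
sample = [
    ("group_a", 7), ("group_a", 9), ("group_a", 5), ("group_a", 5),
    ("group_b", 2), ("group_b", 4), ("group_b", 4), ("group_b", 4),
]

print(standardized_group_means(sample))
# {'group_a': 0.75, 'group_b': -0.75}
```

Because both axes of comparison (age and gender) are measured on this same standardized scale, the gaps between groups can be compared directly, which is what makes the "more than twice" statement above meaningful.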

We sometimes find differences between young and old users in mainstream UX research, but our effect sizes are usually much more modest than those in the Gamer Motivation Study: as users age, task performance using websites declines by 0.8% per year. And it’s almost unheard of to see any reportable differences between male and female users. Say you want to study menu design: the difference between how men and women use any given menu is so negligible that it has zero practical meaning compared to the difference between a design that complies with menu UX guidelines and a poorly designed menu.

In conclusion, the Games User Research Summit was a great conference with many insightful talks by top professionals. Both they and we probably think that mainstream UX and GUR are more different than they really are, but all of us should periodically reflect on the notable similarities between the two fields to make sure that we don’t unduly limit our methods to those traditionally employed in our UX niche. For sure, as persuasive web design becomes increasingly important, mainstream user researchers will need to adopt (and adapt) methods from games user research.

Written by: Jakob Nielsen, Nielsen Norman Group

Posted by: Situated Research